Thoughts on the National AI plan

A couple of days ago, I renewed the annual CTP (compulsory third-party insurance) on the car. Yesterday I drove my youngest to dance. She’s old enough to ride up front but not to drive; her sister is old enough to drive, and had to pass a test before driving on L-plates. We both wore our seatbelts; she played with her phone, I did not. We drove on the left-hand side of the road, gave way to cars on a roundabout and I stayed below the 50 km/h limit. When a P-plater in a ute cut me up on a roundabout, I jammed on the brakes and honked; he waved an apology and we both continued without further drama.

Exciting stuff. Which brings me to the National AI plan released this week here in Australia. Before I get started, I’ll disclose that since founding EthicCo, we’ve been exploring AI and have a vested interest in promoting safe and responsible AI adoption patterns.

While there are some positives in the plan, overall it feels like a cop-out. Earlier industry rumours suggested we might be getting lines in the sand: actual, enforceable governance that businesses could use to shape adoption safely and to measure against to build trust. Somewhere in the last few months, it would appear the government got spooked and took a knee to big tech. It feels like an all-too-familiar pattern playing out.

Don’t get me wrong, the tech big dogs do have a role to play, but in implementation, not in dictating our sovereign stance on AI adoption. It should also be noted that many of these actors pay little or no tax in our country; we have no obligation to subjugate ourselves to their whims. Don’t fear idle threats either: US companies won’t bail. There’s too much money to be made in Australia (building data centres and flogging software), and they don’t want to hand the initiative to Chinese competitors. They will survive; we just don’t need their moral and legal guidance, thanks anyway.

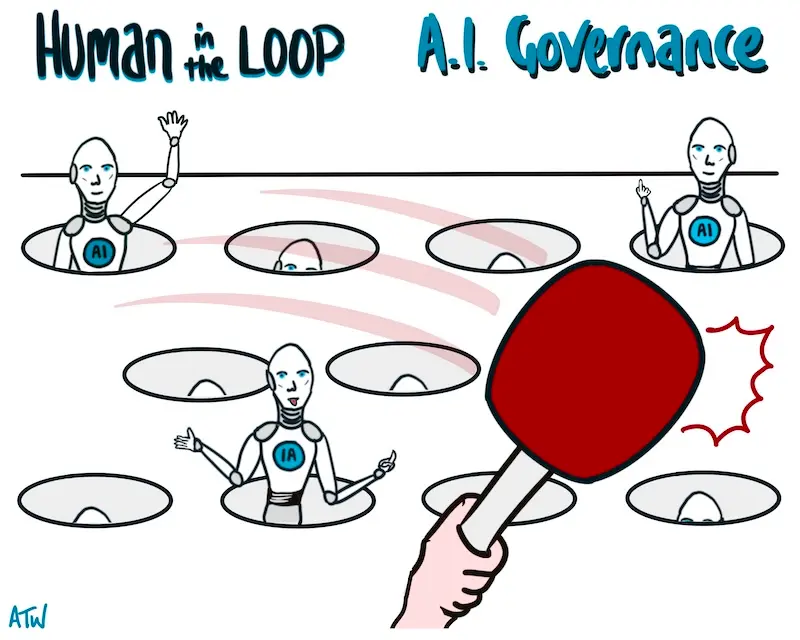

There’s been commentary about how pointless it is trying to govern something moving at breakneck speed: laws are out of date before the ink is dry. This is valid, but it doesn’t mean we should give up and operate totally unregulated. What we need are dynamic mechanisms for governance. They may always be playing whack-a-mole, but better three months behind than three decades. How about we give the newly created AI Safety Institute (AISI) powers to create voluntary guidelines, which then get legislated after a few months if proven effective? Countries like Taiwan have shown that digital, citizen- and expert-driven leadership can shape policy in near real time with superior outcomes. It’s surely not beyond us…

The government has also decided against AI-specific legislation, preferring to lean on existing broad local and global frameworks. This seems reasonable on the surface, but it’s inadequate. AI is transformative; it’s changing our world in ways we don’t yet fully grasp. It needs clear and direct guidance, and it won’t fit naturally within existing legislation. We could flip it around: define the AI rules and have existing legislation pick up the slack. Sensible, AI-specific legislation is not anti-business; it’s an enabler for safe adoption. Australian small and medium businesses need this.

The most frustrating thing about this beige approach is that it’s not a sensible middle ground; it’s a free-for-all, a race to the bottom. It is also a sad repeat of the farcical social media rollout, even including some of the very same bad actors. The tech bros are pretty much the last people on the planet we should entrust our AI regulation to.

Rather than just gripe about tech oligarchs, state capture and a lack of leadership in government, I’ll try to offer something a bit more constructive. I’m a big-picture person (aka not great with details) and I’m fascinated by the interactions between complex adaptive systems, so I’ll apply that lens to this challenge.

AI is emergent: nobody has control of where this goes, no map exists, and every action taken (or not taken) has potential implications we cannot accurately predict. We are where we are: geopolitical tension, ecological breakdown, colonial power structures, mindless consumerism, an exhausted, unequal and divided society… Any way forward starts from here and now.

What we can do is define the constraints (non-negotiables). I’ll throw a few out there, though there are plenty of others we could discuss and agree on as a society:

- Australia should define our own adoption principles and enforce these

- AI should be in service of humanity (not the other way round)

- AI should be ecologically sustainable (so built out in line with renewables)

- Australia should have its own sovereign ecosystem

- AI should be used where it makes sense, not just because it is shiny

- AI should be fair and accessible to all

- AI should not be used for military and other nefarious purposes

From these umbrella values, we can play with actual, measurable rules (e.g. ban deepfakes, no racial profiling, etc.). We won’t always get these right the first time and the sands will shift, hence the need for agile regulation. As we explore and evolve this tech, we should always be measuring and mapping against these principles: if something moves us towards them, do more of it; if not, try something else. This is a proven method for navigating complexity. It would obviously require some form of judgement (enter the AISI again?).

Which takes me back to my family taxi service. We have laws for a reason. They aren’t always in line with our individual preferences, and they become outdated and need revision, but they provide a common understanding of acceptable behaviour. AI needs a speed limit, it needs defined non-negotiables, and it needs dedicated regulations that are agile enough to shift (e.g. ‘no mobile phones while driving’ wasn’t a consideration when I passed my test). These rules will not please everyone. These rules need to be enforceable, or they are meaningless.

AI is a tool, a technology, like a plough, like fire. We learn early that fire needs to be handled with care; so does AI. The National AI plan is a start, but it passes on ownership and courage. It requires iteration.

Maybe I’m being unfair; maybe this is just another example of small government, being frugal with our tax dollars… Light touch may make sense in settled systems with well-defined boundaries, but not in an existential crisis. That requires stronger leadership; it requires politicians standing up to be counted, even if that carries potential risks at the ballot box. This government has made a habit of presenting a small target: be less dysfunctional than the Coalition and ‘she’ll be right’. This moment requires a different approach. AI has the potential to fundamentally reshape society in good or bad ways. It’s up to us to choose a path, but that takes proactive leadership and courage, not sitting on our hands for fear of upsetting some dude in a bunker (or on a rocket).

Get up off those hands

Ewan